preview

We're still working on this feature, but we'd love for you to try it out!

This feature is currently provided as part of a preview program pursuant to our pre-release policies.

New Relic supports monitoring Kubernetes workloads on EKS Fargate by automatically injecting a sidecar containing the infrastructure agent and the nri-kubernetes integration in each pod that needs to be monitored.

If the same Kubernetes cluster also contains EC2 nodes, our solution will also be deployed as a DaemonSet in all of them. No sidecar will be injected into pods scheduled in EC2 nodes, and no DaemonSet will be deployed to Fargate nodes. Here's an example of a hybrid instance with both Fargate and EC2 nodes:

In a mixed environment, the integration only uses a sidecar for Fargate nodes.

New Relic collects all the supported metrics for all Kubernetes objects regardless of where they are scheduled, whether it's Fargate or EC2 nodes. Please note that, due to the limitations imposed by Fargate, the New Relic integration is limited to running in unprivileged mode on Fargate nodes. This means that metrics that are usually fetched from the host directly, like running processes, will not be available for Fargate nodes.

The agent in both scenarios will scrape data from Kube State Metrics (KSM), Kubelet, and cAdvisor and send data in the same format.

Important

Just like for any other Kubernetes cluster, our solution still requires you to deploy and monitor a Kube State Metrics (KSM) instance. Our Helm Chart and/or installer will do so automatically by default, although this behavior can be disabled if your cluster already has a working instance of KSM. This KSM instance will be monitored like any other workload: by injecting a sidecar if it gets scheduled on a Fargate node, or with the local instance of the DaemonSet if it gets scheduled on an EC2 node.

Other components of the New Relic solution for Kubernetes, such as nri-prometheus, nri-metadata-injection, and nri-kube-events, do not have any particularities and will be deployed by our Helm Chart normally as they would in non-Fargate environments.

You can choose between two alternatives for installing New Relic full observability in your EKS Fargate cluster:

- Automatic injection (recommended)

- Manual injection

Regardless of the approach you choose, the experience is exactly the same after it's installed. The only difference is how the container is injected. We do recommend setting up automatic injection with the New Relic infrastructure monitoring operator because it will eliminate the need to manually edit each deployment you want to monitor.

Automatic injection (recommended)

By default, when Fargate support is enabled, New Relic will deploy an operator to the cluster (newrelic-infra-operator). Once deployed, this operator will automatically inject the monitoring sidecar to pods that are scheduled into Fargate nodes, while also managing the creation and the update of Secrets, ClusterRoleBindings, and any other related resources.

This operator accepts a variety of advanced configuration options that can be used to narrow or widen the scope of the injection, through the use of label selectors for both pods and namespaces.

What the operator does

Behind the scenes, the operator sets up a MutatingWebhookConfiguration, which allows it to modify the pod objects that are about to be created in the cluster. On this event, and when the pod being created matches the user’s configuration, the operator will:

- Add a sidecar container to the pod containing the New Relic Kubernetes integration.

- If a secret doesn't exist, create one in the same namespace as the pod containing the New Relic license key, which is needed for the sidecar to report data.

- Add the pod's service account to a ClusterRoleBinding previously created by the operator chart, which will grant this sidecar the required permissions to hit the Kubernetes metrics endpoints.

The ClusterRoleBinding grants the following permissions to the pod being injected:

```yaml
- apiGroups: [""]
  resources:
    - "nodes"
    - "nodes/metrics"
    - "nodes/stats"
    - "nodes/proxy"
    - "pods"
    - "services"
    - "namespaces"
  verbs: ["get", "list"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
```

Tip

In order for the sidecar to be injected, and therefore to get metrics from pods deployed before the operator has been installed, you need to manually perform a rollout (restart) of the affected deployments. This way, when the pods are created, the operator will be able to inject the monitoring sidecar. New Relic has chosen not to do this automatically in order to prevent unexpected service disruptions and resource usage spikes.

Important

Remember to create a Fargate profile with a selector that declares the newrelic namespace (or the namespace you choose for the installation).
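As one possible way to declare such a profile, the sketch below uses an eksctl cluster config file. eksctl is not mentioned in this guide and is only an assumption here; the cluster name, region, and profile name are hypothetical placeholders. You can create an equivalent profile with the AWS console or the AWS CLI instead.

```yaml
# Hypothetical eksctl config: cluster name, region, and profile name are placeholders.
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: my-eks-cluster
  region: us-east-1
fargateProfiles:
  - name: fp-newrelic
    selectors:
      # Matches pods created in the namespace used for the New Relic installation.
      - namespace: newrelic
```

If you install into a different namespace, change the selector to match it.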

Here's the injection workflow:

Tip

The following steps are for a default setup. Before completing these, we suggest you take a look at the Configuration section below to see if you want to modify any aspects of the automatic injection.

Add the New Relic Helm repository

If you haven't done so before, run this command to add the New Relic Helm repository:

```bash
helm repo add newrelic https://helm-charts.newrelic.com
```

Create a file named values.yaml

To install the operator in charge of injecting the infrastructure sidecar, create a file named values.yaml. This file will define your configuration:

```yaml
## Global values

global:
  # -- The cluster name for the Kubernetes cluster.
  cluster: "_YOUR_K8S_CLUSTER_NAME_"

  # -- The license key for your New Relic Account. This will be preferred configuration option if both `licenseKey` and `customSecret` are specified.
  licenseKey: "_YOUR_NEW_RELIC_LICENSE_KEY_"

  # -- (bool) In each integration it has different behavior. Enables operating system metric collection on each EC2 K8s node. Not applicable to Fargate nodes.
  # @default -- false
  privileged: true

  # -- (bool) Must be set to `true` when deploying in an EKS Fargate environment
  # @default -- false
  fargate: true

## Enable nri-bundle sub-charts

newrelic-infra-operator:
  # Deploys the infrastructure operator, which injects the monitoring sidecar into Fargate pods
  enabled: true
  tolerations:
    - key: "eks.amazonaws.com/compute-type"
      operator: "Equal"
      value: "fargate"
      effect: "NoSchedule"
  config:
    ignoreMutationErrors: true
    infraAgentInjection:
      # Injection policies can be defined here. See [values file](https://github.com/newrelic/newrelic-infra-operator/blob/main/charts/newrelic-infra-operator/values.yaml#L114-L125) for more detail.
      policies:
        - namespaceName: namespace-a
        - namespaceName: namespace-b

newrelic-infrastructure:
  # Deploys the Infrastructure Daemonset to EC2 nodes. Disable for Fargate-only clusters.
  enabled: true

nri-metadata-injection:
  # Deploy our mutating admission webhook to link APM and Kubernetes entities
  enabled: true

kube-state-metrics:
  # Deploys Kube State Metrics. Disable if you are already running KSM in your cluster.
  enabled: true

nri-kube-events:
  # Deploy the Kubernetes events integration.
  enabled: true

newrelic-logging:
  # Deploys the New Relic's Fluent Bit daemonset to EC2 nodes. Disable for Fargate-only clusters.
  enabled: true

newrelic-prometheus-agent:
  # Deploys the Prometheus agent for scraping Prometheus endpoints.
  enabled: true
  config:
    kubernetes:
      integrations_filter:
        enabled: true
        source_labels: ["app.kubernetes.io/name", "app.newrelic.io/name", "k8s-app"]
        app_values: ["redis", "traefik", "calico", "nginx", "coredns", "kube-dns", "etcd", "cockroachdb", "velero", "harbor", "argocd", "istio"]
```

Deploy

After creating and tweaking the file, you can deploy the solution using this Helm command:

```bash
helm upgrade --install newrelic-bundle newrelic/nri-bundle -n newrelic --create-namespace -f values.yaml
```

Important

When deploying the solution on a hybrid cluster (with both EC2 and Fargate nodes), please make sure that the solution is not selected by any Fargate profiles; otherwise, the DaemonSet instances will be stuck in a pending state. For Fargate-only environments this is not a concern because no DaemonSet instances are created.

Automatic injection: Known limitations

Here are some issues to be aware of when using automatic injection:

- Currently there is no controller that watches the whole cluster to make sure that secrets that are no longer needed are garbage collected. However, all objects share the same label that you can use to remove all resources, if needed. We inject the label newrelic/infra-operator-created: true, which you can use to delete resources with a single command.

- At the moment, it's not possible to use the injected sidecar to monitor services running in the pod. The sidecar will only monitor Kubernetes itself. However, advanced users might want to exclude these pods from automatic injection and manually inject a customized version of the sidecar with on-host integrations enabled by configuring them and mounting their configurations in the proper place. For help, see this tutorial.

Automatic injection: Configuration

You can configure different aspects of the automatic injection. By default, the operator will inject the monitoring sidecar to all pods deployed in Fargate nodes which are not part of a Job or a BatchJob.

This behavior can be changed through configuration options. For example, you can define selectors to narrow or widen the selection of pods that are injected, assign resources to the operator, and tune the sidecar. Also, you can add other attributes, labels, and environment variables. Please refer to the chart README.md and values.yaml.

Important

Specifying your own custom injection rules will discard the default ruleset that prevents sidecar injection on pods that are not scheduled in Fargate. Please ensure that your custom rules have the same effect; otherwise, on hybrid clusters which also have the DaemonSet deployed, pods scheduled in EC2 will be monitored twice, leading to incorrect or duplicate data.
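As a rough sketch of a custom rule that keeps injection limited to Fargate pods, the fragment below combines a namespace name with a pod label selector. Only namespaceName appears in this guide. The podSelector field and the eks.amazonaws.com/fargate-profile label (which EKS is understood to add to pods scheduled on Fargate) are assumptions here, so please confirm both against the chart's values.yaml before relying on them.

```yaml
newrelic-infra-operator:
  config:
    infraAgentInjection:
      policies:
        # namespace-a is the example namespace used in the values.yaml of this guide.
        - namespaceName: namespace-a
          # Assumption: a standard Kubernetes label selector that only matches
          # pods carrying the Fargate profile label, so EC2 pods are skipped.
          podSelector:
            matchExpressions:
              - key: "eks.amazonaws.com/fargate-profile"
                operator: Exists
```

With a rule shaped like this, pods in namespace-a that land on EC2 nodes would be left to the DaemonSet, which avoids the double monitoring described above.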

Update to the latest version or to a new configuration

To update to the latest version of the EKS Fargate integration, refresh the Helm repository with helm repo update newrelic, then reinstall the bundle by running the installation command above again.

To update the configuration of the injected infrastructure agent or of the operator itself, modify the values.yaml file and upgrade the Helm release with the new configuration. The operator is updated immediately, and your workloads will be instrumented with the new version on their next restart. If you wish to upgrade them immediately, you can force a restart of your workloads by running:

```bash
kubectl rollout restart deployment YOUR_APP
```

Automatic injection: Uninstall

To remove the operator that performs the automatic injection but keep the rest of the New Relic solution, disable it with Helm by setting newrelic-infra-operator.enabled to false, either in the values.yaml file or on the command line (--set), and re-run the installation command above.

We strongly recommend keeping the --set global.fargate=true flag, since it does not enable automatic injection but makes other components of the installation Fargate-aware, preventing unwanted behavior.
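As a minimal sketch, and assuming the key names from the values.yaml file shown earlier in this guide, the relevant part of the file might look like this after disabling the operator:

```yaml
global:
  # Keep Fargate awareness enabled for the remaining components.
  fargate: true

newrelic-infra-operator:
  # Stops deploying the operator, so no new sidecars are injected.
  enabled: false
```

The rest of the file can stay as it was for the original installation.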

To uninstall the whole solution:

Completely uninstall the Helm release.

Roll out the pods again in order to remove the sidecar:

```bash
kubectl rollout restart deployment YOUR_APP
```

Garbage collect the secrets:

```bash
kubectl delete secrets -n YOUR_NAMESPACE -l newrelic/infra-operator-created=true
```

Manual injection

If you have any concerns about automatic injection, you can inject the sidecar manually by modifying the manifests of the workloads that are going to be scheduled on Fargate nodes. Please note that adding the sidecar to deployments scheduled on EC2 nodes may lead to incorrect or duplicate data, especially if those nodes are already being monitored with the DaemonSet.

These objects are required for the sidecar to successfully report data:

- The ClusterRole providing the permission needed by the nri-kubernetes integration.

- A ClusterRoleBinding linking the ClusterRole and the service account of the pod.

- The secret storing the New Relic licenseKey in each Fargate namespace.

- The sidecar container in the specific template of the monitored workload.

Tip

These manual setup steps are for a generic installation. Before completing these, take a look at the Configuration section below to see if you want to modify any aspects of the automatic injection.

Complete these steps for manual injection:

ClusterRole

If the ClusterRole doesn't exist, create it, and grant the permissions required to hit the metrics endpoints. You only need to do this once, even for monitoring multiple applications in the same cluster. You can use this snippet as it appears below, without any changes:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app: newrelic-infrastructure
  name: newrelic-newrelic-infrastructure-infra-agent
rules:
  - apiGroups:
      - ""
    resources:
      - nodes
      - nodes/metrics
      - nodes/stats
      - nodes/proxy
      - pods
      - services
    verbs:
      - get
      - list
  - nonResourceURLs:
      - /metrics
    verbs:
      - get
```

Injected sidecar

For each workload you want to monitor, add an additional sidecar container for the newrelic/infrastructure-k8s image. Take the container of the following snippet and inject it in the workload you want to monitor, specifying the name of your FargateProfile in the customAttributes variable. Note that the volumes can be defined as emptyDir: {}.

Tip

In the special case of a KSM deployment, you also need to remove the DISABLE_KUBE_STATE_METRICS environment variable and increase the resource requests and limits.

```yaml
apiVersion: apps/v1
kind: Deployment
spec:
  template:
    spec:
      containers:
        - name: newrelic-infrastructure
          env:
            - name: NRIA_LICENSE_KEY
              valueFrom:
                secretKeyRef:
                  key: license
                  name: newrelic-newrelic-infrastructure-config
            - name: NRIA_VERBOSE
              value: "1"
            - name: DISABLE_KUBE_STATE_METRICS
              value: "true"
            - name: CLUSTER_NAME
              value: testing-injection
            - name: COMPUTE_TYPE
              value: serverless
            - name: NRK8S_NODE_NAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: spec.nodeName
            - name: NRIA_DISPLAY_NAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: spec.nodeName
            - name: NRIA_CUSTOM_ATTRIBUTES
              value: '{"clusterName":"$(CLUSTER_NAME)", "computeType":"$(COMPUTE_TYPE)", "fargateProfile":"[YOUR FARGATE PROFILE]"}'
            - name: NRIA_PASSTHROUGH_ENVIRONMENT
              value: KUBERNETES_SERVICE_HOST,KUBERNETES_SERVICE_PORT,CLUSTER_NAME,CADVISOR_PORT,NRK8S_NODE_NAME,KUBE_STATE_METRICS_URL,KUBE_STATE_METRICS_POD_LABEL,TIMEOUT,ETCD_TLS_SECRET_NAME,ETCD_TLS_SECRET_NAMESPACE,API_SERVER_SECURE_PORT,KUBE_STATE_METRICS_SCHEME,KUBE_STATE_METRICS_PORT,SCHEDULER_ENDPOINT_URL,ETCD_ENDPOINT_URL,CONTROLLER_MANAGER_ENDPOINT_URL,API_SERVER_ENDPOINT_URL,DISABLE_KUBE_STATE_METRICS,DISCOVERY_CACHE_TTL
          image: newrelic/infrastructure-k8s:2.4.0-unprivileged
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              memory: 100M
              cpu: 200m
            requests:
              cpu: 100m
              memory: 50M
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1000
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /var/db/newrelic-infra/data
              name: tmpfs-data
            - mountPath: /var/db/newrelic-infra/user_data
              name: tmpfs-user-data
            - mountPath: /tmp
              name: tmpfs-tmp
            - mountPath: /var/cache/nr-kubernetes
              name: tmpfs-cache
[...]
```

When adding the manifest of the sidecar agent manually, you can use any agent configuration option to configure the agent behavior. For help, see Infrastructure agent configuration settings.
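The sidecar snippet mounts four volumes that the pod template also has to declare. As a sketch of what that might look like, and following the note that the volumes can be defined as emptyDir: {}, the fragment below reuses the volume names from the volumeMounts of the sidecar container. Its placement under spec.template.spec assumes a Deployment, as in the snippet above.

```yaml
spec:
  template:
    spec:
      volumes:
        # One scratch volume per volumeMount of the sidecar container.
        - name: tmpfs-data
          emptyDir: {}
        - name: tmpfs-user-data
          emptyDir: {}
        - name: tmpfs-tmp
          emptyDir: {}
        - name: tmpfs-cache
          emptyDir: {}
```

Because the sidecar runs with readOnlyRootFilesystem: true, these volumes give the agent writable locations for its data, cache, and temporary files.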

ClusterRoleBinding

Create a ClusterRoleBinding, or add the ServiceAccount of the application you want to monitor to a previously created one. All workloads may share the same ClusterRoleBinding, but the ServiceAccount of each one must be added to it.

Create the following ClusterRoleBinding, whose subjects are the service accounts of the pods you want to monitor.

Tip

You don't need to repeat the same service account twice. Each time you want to monitor a pod with a service account that isn't included yet, just add it to the list.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: newrelic-newrelic-infrastructure-infra-agent
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: newrelic-newrelic-infrastructure-infra-agent
subjects:
  - kind: ServiceAccount
    name: [INSERT_SERVICE_ACCOUNT_NAME_OF_WORKLOAD]
    namespace: [INSERT_SERVICE_ACCOUNT_NAMESPACE_OF_WORKLOAD]
```

Secret containing the license key

Create a secret containing the New Relic license key. Each namespace needs its own secret.

Create the following Secret, which has a license field holding the Base64-encoded value of your license key. One secret is needed in each namespace where a pod you want to monitor is running.

```yaml
apiVersion: v1
data:
  license: INSERT_YOUR_NEW_RELIC_LICENSE_ENCODED_IN_BASE64
kind: Secret
metadata:
  name: newrelic-newrelic-infrastructure-config
  namespace: [INSERT_NAMESPACE_OF_WORKLOAD]
type: Opaque
```

Manual injection: Update to the latest version

To update any of the components, you just need to modify the deployed YAML. Updating any of the fields of the injected container will cause the pod to be re-created.

Important

The agent cannot hot load the New Relic license key. After updating the secret, you need to roll out the deployments again.

Manual injection: Uninstall the Fargate integration

To remove the injected container and the related resources, you just have to remove the following:

- The sidecar from the workloads that should no longer be monitored.

- All the secrets containing the New Relic license key.

- The ClusterRole and ClusterRoleBinding objects.

Notice that removing the sidecar container will cause the pod to be re-created.

Logging

New Relic logging isn't available on Fargate nodes because of security constraints imposed by AWS, but here are some logging options:

- If you're using Fluent Bit for logging, see Kubernetes plugin for log forwarding.

- If your log data is already being monitored by AWS FireLens, see AWS FireLens plugin for log forwarding.

- If your log data is already being monitored by Amazon CloudWatch Logs, see Stream logs using Kinesis Data Firehose.

- See AWS Lambda for sending CloudWatch logs.

- See Three ways to forward logs from Amazon ECS to New Relic.

Troubleshooting

View your EKS data

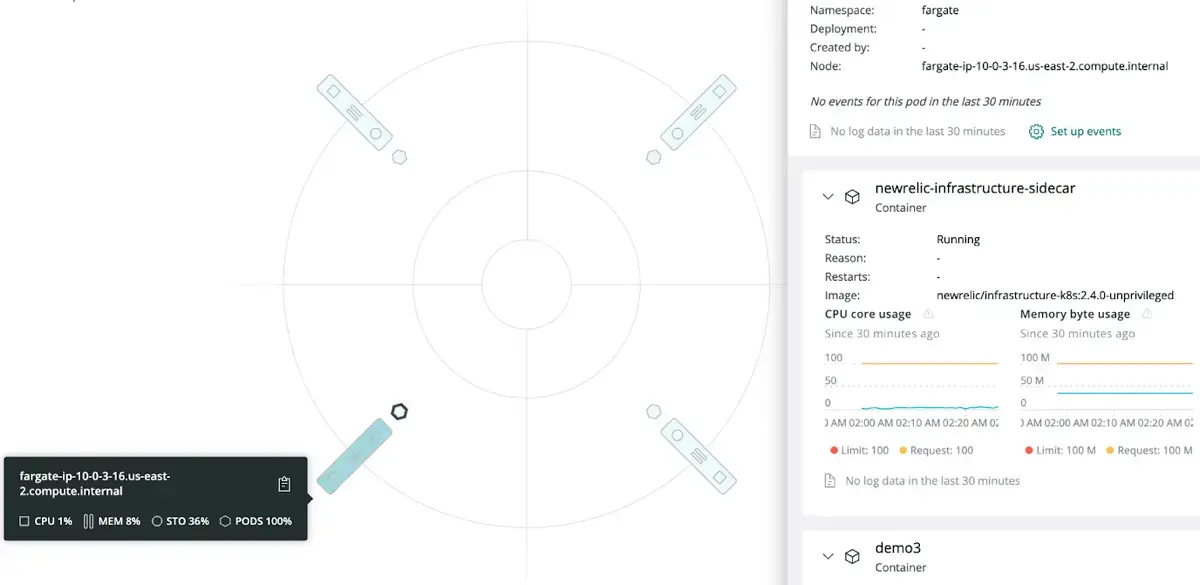

Here's an example of what a Fargate node looks like in the New Relic UI:

To view your AWS data:

Go to one.newrelic.com > All capabilities > Infrastructure > Kubernetes and do one of the following:

- Select an integration name to view data.

- Select the Explore data icon to view AWS data.

Filter your data using two Fargate tags:

- computeType=serverless

- fargateProfile=[name of the Fargate profile to which the workload belongs]